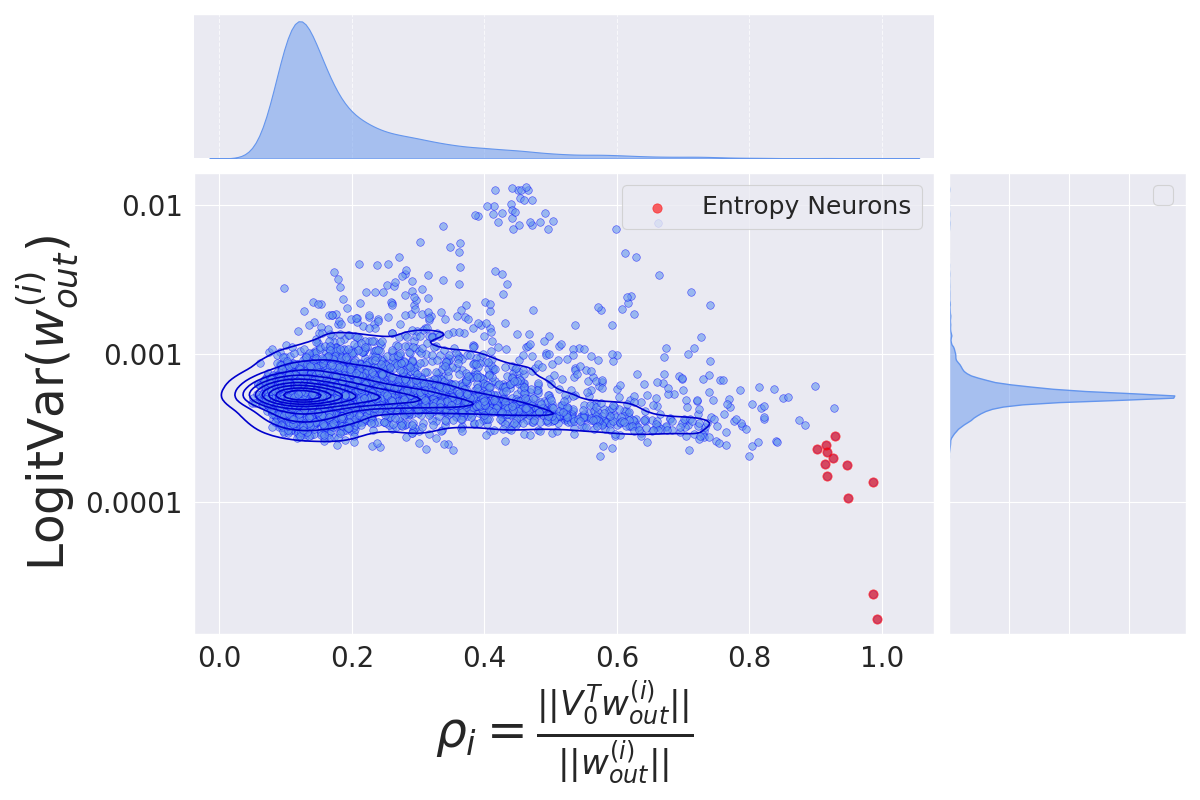

LogitVar

This measure quantifies the variance of a neuron's direct effect on the output logits. For a neuron $i$ with output weight vector $w_{\mathrm{out}}^{(i)}$, it is defined as:

$$
\mathrm{LogitVar}(w_{\mathrm{out}}^{(i)}) = \mathrm{Var}\left\{ \frac{w_{\mathrm{U}}^{(t)} \cdot w_{\mathrm{out}}^{(i)}}{\|w_{\mathrm{U}}^{(t)}\| \times \|w_{\mathrm{out}}^{(i)}\|} \;;\; t \in V \right\}
$$

where $V$ is the set of tokens in the vocabulary and $w_U^{(t)}$ is the $t$-th row of $W_U$.
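As an illustration, the definition above can be sketched in NumPy. This is a minimal sketch rather than a reference implementation: the function name `logit_var`, the `(vocab_size, d_model)` layout of `W_U` (chosen so that row $t$ is $w_U^{(t)}$), and the use of the population variance (`ddof=0`) are assumptions not fixed by the text.

```python
import numpy as np

def logit_var(W_U: np.ndarray, w_out: np.ndarray) -> float:
    """Variance, over vocabulary tokens, of the cosine similarity
    between each row of W_U and the neuron's output weights.

    Assumed shapes: W_U is (vocab_size, d_model), w_out is (d_model,).
    """
    # Dot product of every unembedding row w_U^(t) with w_out.
    dots = W_U @ w_out
    # Product of norms ||w_U^(t)|| * ||w_out|| for every token t.
    norms = np.linalg.norm(W_U, axis=1) * np.linalg.norm(w_out)
    cosines = dots / norms
    # Population variance (ddof=0); the text does not specify the estimator.
    return float(np.var(cosines))
```

Because each term is a cosine similarity, the value does not change when `w_out` is rescaled by a positive constant, so the measure depends only on the direction of the neuron's output weights.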

Effective Null Space Projection (ρ)

This measure quantifies how much of a neuron's output aligns with directions that minimally impact the model's final output. These directions form the effective null space of the unembedding matrix $W_U$, denoted $\mathbf{V}_0$. For a neuron $i$, it is defined as:

$$
\rho_i = \frac{\|\mathbf{V}_0^{\mathrm{T}} w_{\mathrm{out}}^{(i)}\|}{\|w_{\mathrm{out}}^{(i)}\|}.
$$
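The ratio can be sketched in NumPy as follows. The text here does not say how $\mathbf{V}_0$ is constructed, so the helper `effective_null_space` is a hypothetical choice: it assumes $\mathbf{V}_0$ holds the `k` right singular vectors of $W_U$ with the smallest singular values, with `k` a free parameter and `W_U` laid out as `(vocab_size, d_model)` with `vocab_size >= d_model`. Only `rho` mirrors the formula itself.

```python
import numpy as np

def effective_null_space(W_U: np.ndarray, k: int) -> np.ndarray:
    """Hypothetical construction of V_0 (an assumption, not from the text):
    the k right singular vectors of W_U with the smallest singular values.

    Assumed shape: W_U is (vocab_size, d_model) with vocab_size >= d_model.
    Returns an orthonormal matrix of shape (d_model, k).
    """
    # np.linalg.svd returns singular values in descending order, so the
    # last k rows of Vt are the least-amplified directions in d_model space.
    _, _, Vt = np.linalg.svd(W_U, full_matrices=False)
    return Vt[-k:].T

def rho(V0: np.ndarray, w_out: np.ndarray) -> float:
    """Fraction of w_out's norm that lies in the span of V0's columns."""
    return float(np.linalg.norm(V0.T @ w_out) / np.linalg.norm(w_out))
```

When the columns of `V0` are orthonormal, `rho` lies between 0 and 1: a value near 1 means the neuron writes almost entirely into the effective null space, and a value near 0 means almost none of its output does.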